This issue still seems to be present in 14.0.1:

    CEPH_VOLUME_DEBUG=1 ceph-volume lvm zap /dev/vdb1
    Running command: /usr/sbin/pvs --noheadings --readonly --separator=" " -o pv_name,pv_tags,pv_uuid,vg_name
     stderr: WARNING: Failed to connect to lvmetad.
    Running command: /usr/sbin/lvs --noheadings --readonly --separator=" " -o lv_tags,lv_path,lv_name,vg_name,lv_uuid
    Running command: /usr/sbin/wipefs --all /dev/vdb1
     stderr: wipefs: error: /dev/sdb: probing initialization failed: Device or resource busy
    exception caught by decorator
      File "/usr/lib/python2.7/site-packages/ceph_volume/decorators.py", line 59, in newfunc
      File "/usr/lib/python2.7/site-packages/ceph_volume/main.py", line 153, in main
        terminal.dispatch(self.mapper, subcommand_args)
      File "/usr/lib/python2.7/site-packages/ceph_volume/terminal.py", line 182, in dispatch
      File "/usr/lib/python2.7/site-packages/ceph_volume/devices/lvm/main.py", line 38, in main
        terminal.dispatch(self.mapper, self.argv)
      File "/usr/lib/python2.7/site-packages/ceph_volume/devices/lvm/zap.py", line 169, in main
      File "/usr/lib/python2.7/site-packages/ceph_volume/decorators.py", line 16, in is_root
      File "/usr/lib/python2.7/site-packages/ceph_volume/devices/lvm/zap.py", line 102, in zap
      File "/usr/lib/python2.7/site-packages/ceph_volume/devices/lvm/zap.py", line 21, in wipefs
      File "/usr/lib/python2.7/site-packages/ceph_volume/process.py", line 149, in run
    RuntimeError: command returned non-zero exit status: 1
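"Device or resource busy" from wipefs generally means the kernel still has the device claimed, for example by a device-mapper logical volume that a running ceph-osd sits on, or by an md array. A minimal sketch for checking that before zapping, assuming a Linux host with sysfs mounted under /sys; the helper names `block_device_holders` and `mount_points` are hypothetical and not part of ceph-volume.

```python
import os
from typing import List


def block_device_holders(name: str, sysfs_root: str = "/sys/class/block") -> List[str]:
    """List kernel devices stacked on top of block device `name` (e.g. "sdb", "vdb1").

    Reads <sysfs_root>/<name>/holders. Entries such as "dm-0" (an LVM logical
    volume) or "md127" (an md array) mean the device is still claimed, which
    makes exclusive opens fail with EBUSY ("Device or resource busy").
    """
    holders_dir = os.path.join(sysfs_root, name, "holders")
    if not os.path.isdir(holders_dir):
        raise FileNotFoundError(f"no sysfs holders directory for {name!r}")
    return sorted(os.listdir(holders_dir))


def mount_points(device: str, mounts_text: str) -> List[str]:
    """Return the mount points whose source is exactly `device` (e.g. "/dev/sdb1").

    `mounts_text` is the content of /proc/mounts: one mount per line,
    "source target fstype options dump pass".
    """
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == device:
            points.append(fields[1])
    return points
```

On a live host this would be called as `block_device_holders("sdb")` and `mount_points("/dev/sdb", open("/proc/mounts").read())`. For an LVM-based OSD, a `dm-N` holder is expected for as long as the OSD's logical volume is active, so the OSD has to be stopped and its volume released before wipefs can open the disk.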

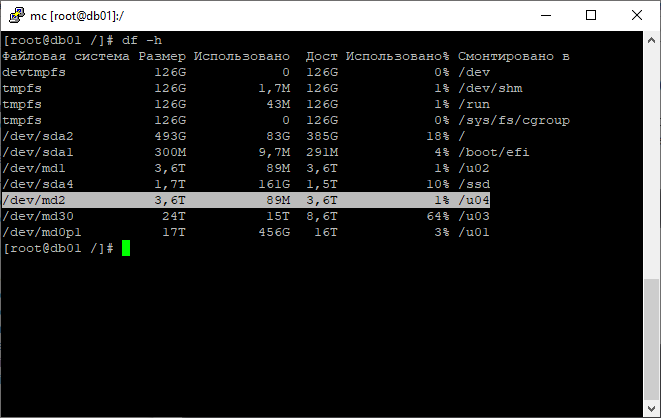

Running command: ceph-volume lvm zap /dev/sdb > RuntimeError: command returned non-zero exit status: /]# ps faux > RuntimeError: command returned non-zero exit status: /]# dfįilesystem 1K-blocks Used Available Use% Mounted on

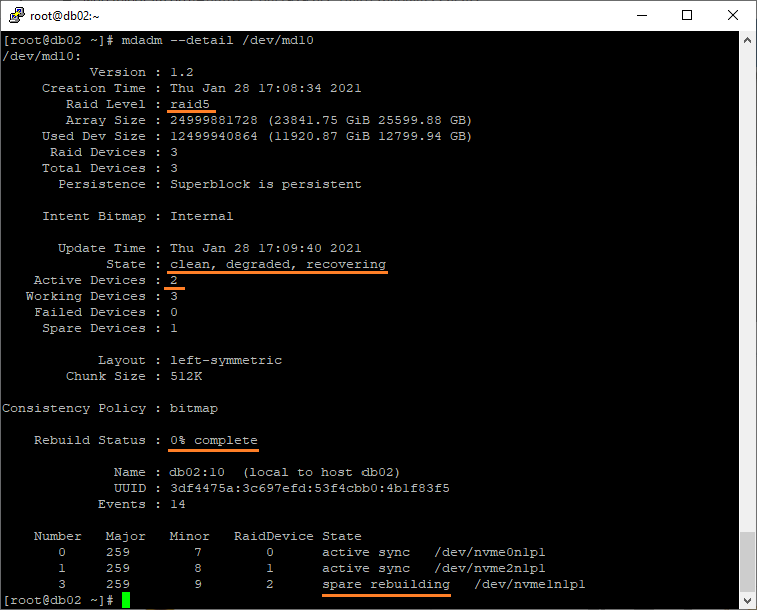

Stderr: wipefs: error: /dev/sdb: probing initialization failed: Device or resource busy Running command: /usr/sbin/wipefs -all /dev/sdb I have made a small test, and it looks like result is the same.USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMANDĬeph 1616 0.1 3.8 845844 19340 ? Ssl 12:31 0:06 ceph-osd -i 1 -setuser ceph -setgroup disk Here I have raid made of /dev/sda3 and /dev/sdb3.

Is it necessary to use both? How do their results differ?
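Part of the answer to "wipefs -af or mdadm --zero-superblock --force?" is where each tool writes. wipefs -a only erases the magic bytes of the signatures libblkid detects (on an md member that is the linux_raid_member signature), and its man page states it does not erase any other data; mdadm --zero-superblock overwrites the md superblock itself, and with --force it does so even if the superblock does not look valid. The sketch below computes where mdadm places that superblock for each metadata version, based on my reading of md(4) and mdadm's offset calculation; `md_superblock_offset` is a hypothetical helper.

```python
def md_superblock_offset(device_size_bytes: int, metadata: str) -> int:
    """Byte offset of the md superblock on a member device of the given size.

    0.90 -> a 64 KiB-aligned block, 64-128 KiB before the end of the device
    1.0  -> near the end, roughly 8-12 KiB before it (4 KiB aligned)
    1.1  -> the very start of the device
    1.2  -> 4 KiB from the start of the device
    """
    sector = 512
    if metadata == "0.90":
        reserved = 64 * 1024
        # Round the size down to 64 KiB, then step back one 64 KiB block.
        return (device_size_bytes & ~(reserved - 1)) - reserved
    if metadata == "1.0":
        sectors = device_size_bytes // sector
        # 8 KiB (16 sectors) back from the end, rounded down to 4 KiB (8 sectors).
        return ((sectors - 16) & ~7) * sector
    if metadata == "1.1":
        return 0
    if metadata == "1.2":
        return 4 * 1024
    raise ValueError(f"unknown md metadata version: {metadata}")
```

Because 0.90 and 1.0 metadata live at the end of the member, the array's data starts at offset 0, so a filesystem inside the array can also show up as a signature on the raw member; wipefs -a erases that as well, while mdadm --zero-superblock leaves it alone.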

How to force wipefs

I have seen instructions on how to remove a software RAID, and some of them include both commands: wipefs -af and mdadm --zero-superblock --force.
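One common order for dismantling an array like the /dev/sda3 + /dev/sdb3 one is: stop the array, zero the md superblock on each member, then let wipefs clear whatever signatures remain. A minimal sketch that only builds the argv lists, so nothing runs by accident; the array name /dev/md0 in the usage below and the helper `raid_teardown_commands` are hypothetical, and `force` simply mirrors the `-f` / `--force` options from those instructions.

```python
from typing import List, Sequence


def raid_teardown_commands(
    md_device: str, members: Sequence[str], force: bool = False
) -> List[List[str]]:
    """Build, but do not run, the argv lists for dismantling an md array.

    Order: stop the array so md releases its members, zero the md superblock
    on each member, then let wipefs erase any signatures that are left.
    `force` adds "--force" to mdadm and "-f" to wipefs, as in
    `mdadm --zero-superblock --force` and `wipefs -af`.
    """
    zero = ["mdadm", "--zero-superblock"] + (["--force"] if force else [])
    wipe = ["wipefs", "-a"] + (["-f"] if force else [])
    commands = [["mdadm", "--stop", md_device]]
    commands += [zero + [member] for member in members]
    commands += [wipe + [member] for member in members]
    return commands
```

Each list could then be run with `subprocess.run(argv, check=True)`, e.g. for `raid_teardown_commands("/dev/md0", ["/dev/sda3", "/dev/sdb3"])`. Doing it in this order means the non-forced variants usually suffice, since after `mdadm --stop` nothing holds the members any more; -f on wipefs is mainly needed for cases like erasing a partition-table signature on a whole disk.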
